ShardingSphere-JDBC

采用Docker实现

主从复制实现

- 新增主节点配置文件

mkdir -p /wolfcode/mysql/write_db/conf

vi /wolfcode/mysql/write_db/conf/my.cnf

#新增内容如下

[mysqld]

#服务器唯一id,注意在集群中不要出现一样的

server-id=1

binlog_format=STATEMENT

log-bin=master-bin

log-bin-index=master-bin.index- 启动MySQL主节点

docker run -d --name write_db -p 3306:3306 \

-v /wolfcode/mysql/write_db/conf:/etc/mysql/conf.d \

-v /wolfcode/mysql/write_db/data:/var/lib/mysql \

-e MYSQL_ROOT_PASSWORD=root \

mysql- 进行master容器,登录mysql

docker exec -it write_db /bin/bash

mysql -uroot -proot

#查看主节点状态

show master status;- 在mysql内部创建slave同步账号

-- 创建slave用户

create user 'slave_user'@'%';

-- 设置密码

alter user 'slave_user'@'%' identified with mysql_native_password by 'root';

-- 授予复制权限

grant replication slave on *.* to 'slave_user'@'%';

-- 刷新权限

flush privileges;- 从节点1配置信息

#退出mysql和docker

exit; exit;

mkdir -p /wolfcode/mysql/read_db0/conf

vi /wolfcode/mysql/read_db0/conf/my.cnf

#新增内容如下

[mysqld]

#服务器唯一id,注意在集群中不要出现一样的

server-id=2

relay-log-index=slave-relay-bin.index

relay-log=slave-replay-bin

#启动从节点1

docker run -d --name read_db0 -p 3307:3306 \

-v /wolfcode/mysql/read_db0/conf:/etc/mysql/conf.d \

-v /wolfcode/mysql/read_db0/data:/var/lib/mysql \

-e MYSQL_ROOT_PASSWORD=root \

mysql

#进入从节点容器,并登录mysql

docker exec -it read_db0 /bin/bash

mysql -uroot -proot

#进入mysql执行命令

#docker inspect -f '{{.Name}} => {{.NetworkSettings.IPAddress }}' $(docker ps -aq) 查看ip

change master to master_host='172.17.0.2', master_user='slave_user', master_password='root', master_port=3306, master_log_file='master-bin.000004', master_log_pos=156;

#启动从节点1并查看状态

start slave;

show slave status \G # Slave_IO_Running: Yes Slave_SQL_Running: Yes- 从节点2配置信息

#退出mysql和docker

exit; exit;

mkdir -p /wolfcode/mysql/read_db1/conf

vi /wolfcode/mysql/read_db1/conf/my.cnf

#新增内容如下

[mysqld]

#服务器唯一id,注意在集群中不要出现一样的

server-id=3

relay-log-index=slave-relay-bin.index

relay-log=slave-replay-bin

#启动从节点2

docker run -d --name read_db1 -p 3308:3306 \

-v /wolfcode/mysql/read_db1/conf:/etc/mysql/conf.d \

-v /wolfcode/mysql/read_db1/data:/var/lib/mysql \

-e MYSQL_ROOT_PASSWORD=root \

mysql

#进入从节点容器,并登录mysql

docker exec -it read_db1 /bin/bash

mysql -uroot -proot

#进入mysql执行命令

#docker inspect -f '{{.Name}} => {{.NetworkSettings.IPAddress }}' $(docker ps -aq) 查看ip

#show master logs; 查看主节点log日志名

change master to master_host='172.17.0.2', master_user='slave_user', master_password='root', master_port=3306, master_log_file='master-bin.000004', master_log_pos=156;

#启动从节点2并查看状态

start slave;

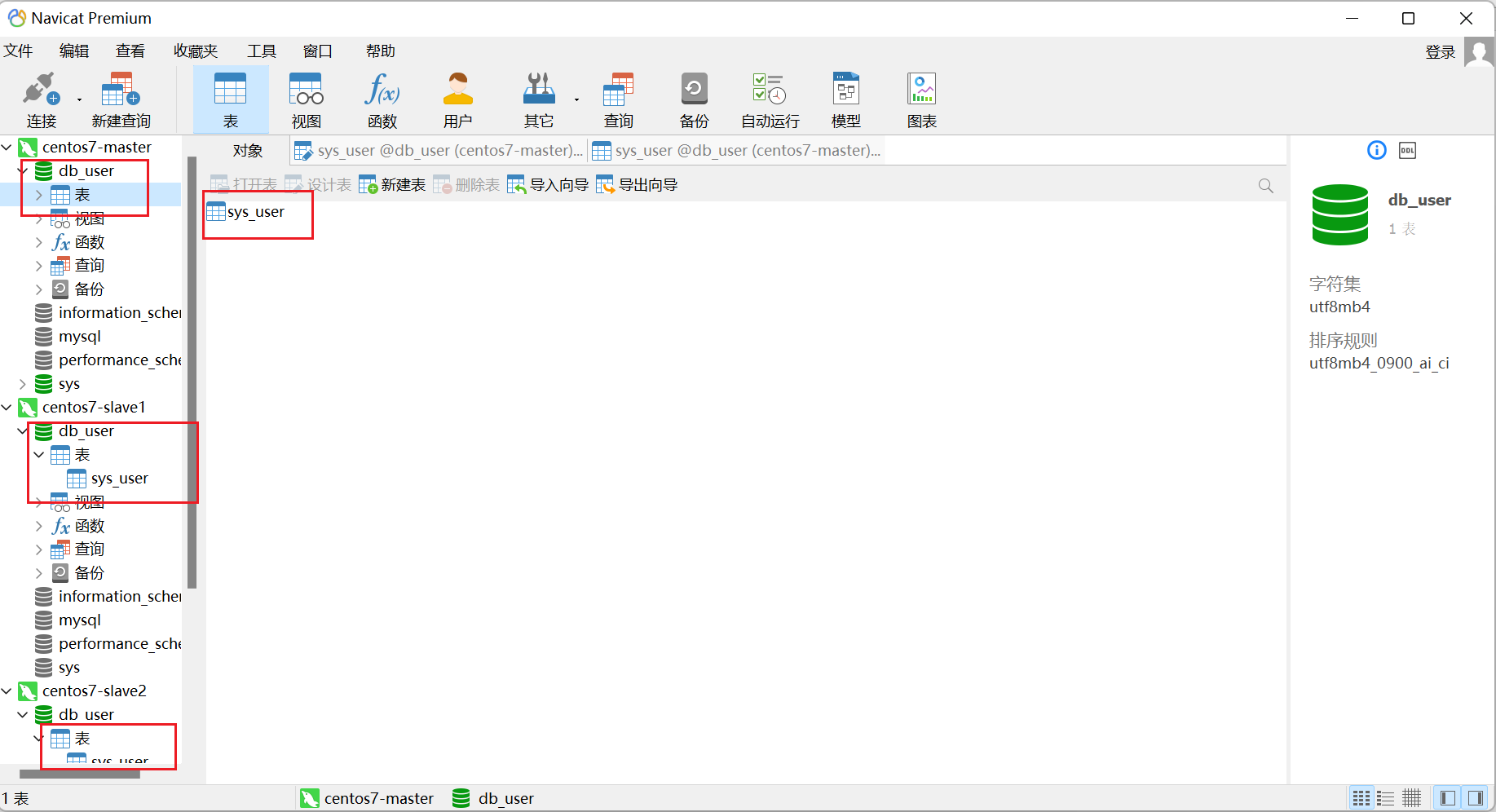

show slave status \G # Slave_IO_Running: Yes Slave_SQL_Running: Yes创建完成后连接三个数据库,主表的变化,从表都会进行更新 --> Ip地址相同,端口不同3306,3307,3308

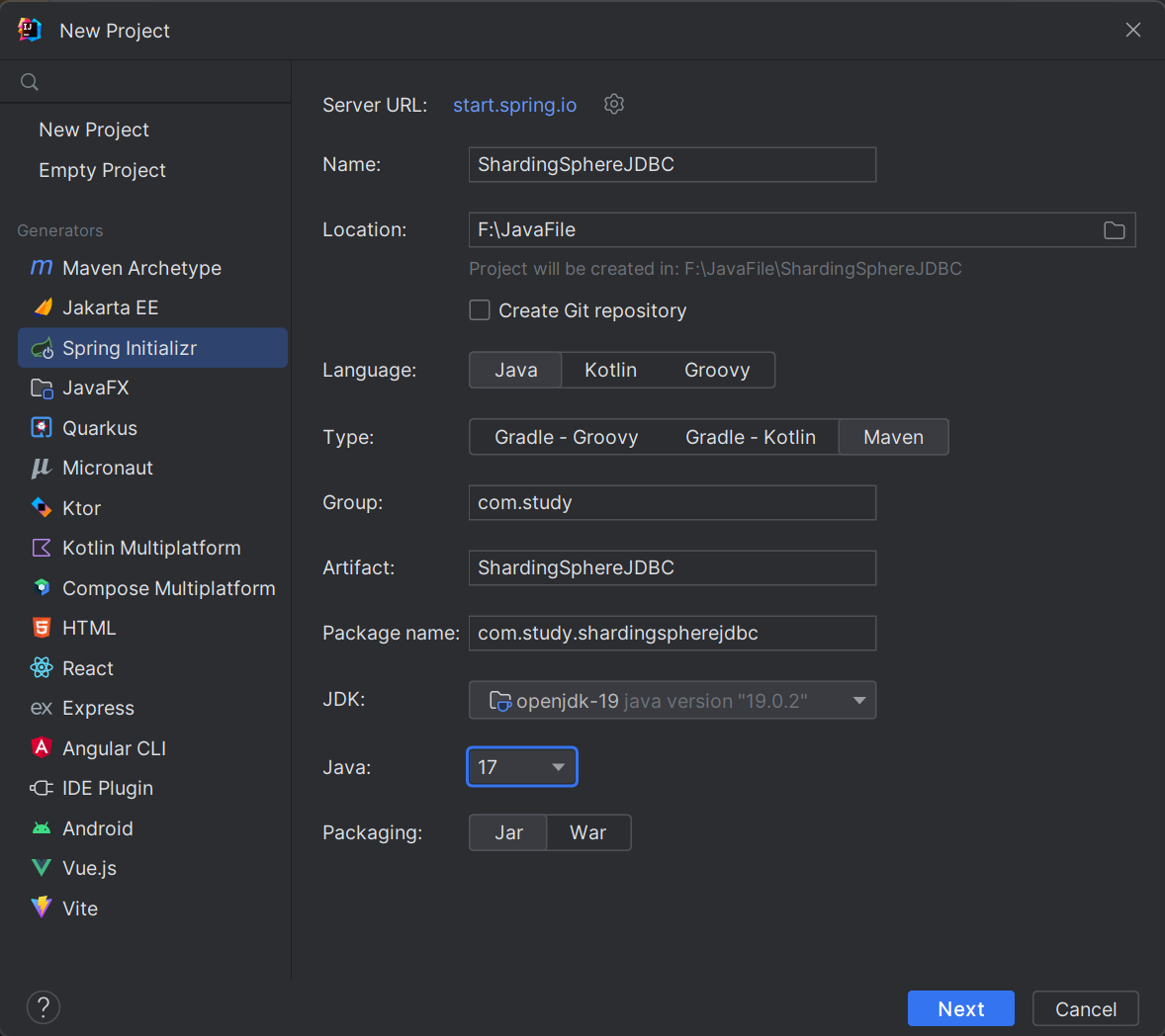

- 使用ShardingSphere-JDBC(springboot框架)

引入maven依赖

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter</artifactId>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.shardingsphere</groupId>

<artifactId>shardingsphere-jdbc-core</artifactId>

<version>5.3.1</version>

</dependency>

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>mybatis-plus-boot-starter</artifactId>

<version>3.5.3.1</version>

</dependency>

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>mybatis-plus-generator</artifactId>

<version>3.5.3.1</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.27</version>

</dependency>

<dependency>

<groupId>org.freemarker</groupId>

<artifactId>freemarker</artifactId>

<version>2.3.28</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.yaml</groupId>

<artifactId>snakeyaml</artifactId>

<version>1.33</version>

</dependency>配置

#在resources目录下新建sharding.yaml

#数据源配置

dataSources:

write_db:

dataSourceClassName: com.zaxxer.hikari.HikariDataSource

driverClassName: com.mysql.jdbc.Driver

jdbcUrl: jdbc:mysql://192.168.31.128:3306/db_user?useUnicode=true&characterEncoding=UTF-8&allowMultiQueries=true&serverTimezone=Asia/Shanghai&useSSL=false&allowPublicKeyRetrieval=true

username: root

password: root

read_db0:

dataSourceClassName: com.zaxxer.hikari.HikariDataSource

driverClassName: com.mysql.jdbc.Driver

jdbcUrl: jdbc:mysql://192.168.31.128:3307/db_user?useUnicode=true&characterEncoding=UTF-8&allowMultiQueries=true&serverTimezone=Asia/Shanghai&useSSL=false&allowPublicKeyRetrieval=true

username: root

password: root

read_db1:

dataSourceClassName: com.zaxxer.hikari.HikariDataSource

driverClassName: com.mysql.jdbc.Driver

jdbcUrl: jdbc:mysql://192.168.31.128:3308/db_user?useUnicode=true&characterEncoding=UTF-8&allowMultiQueries=true&serverTimezone=Asia/Shanghai&useSSL=false&allowPublicKeyRetrieval=true

username: root

password: root

rules:

# 读写分离

- !READWRITE_SPLITTING

dataSources:

readwrite_ds:

staticStrategy:

writeDataSourceName: write_db

readDataSourceNames:

- read_db0

- read_db1

loadBalancerName: random

#负载均衡

loadBalancers:

random:

type: RANDOM

#显示sql日志

props:

sql-show: true

#application.yml

spring:

datasource:

driver-class-name: org.apache.shardingsphere.driver.ShardingSphereDriver

url: jdbc:shardingsphere:classpath:sharding.yamltest下新建一个Generator.java,用于mybatis-plus 代码生成

public class Generator {

@Test

void generatorDomain() {

FastAutoGenerator.create("jdbc:mysql://192.168.31.128:3306/db_user?serverTimezone=UTC&useSSL=false", "root", "root")

.globalConfig(builder -> {

builder.author("canace") // 设置作者

// .enableSwagger(f) // 开启 swagger 模式

.fileOverride() // 覆盖已生成文件

.outputDir("F:\\JavaFile\\ShardingSphereJDBC\\src\\main\\java"); // 指定输出目录

})

.packageConfig(builder -> {

builder.parent("com.study.shardingspherejdbc") // 设置父包名

.moduleName(null) // 设置父包模块名

.pathInfo(Collections.singletonMap(OutputFile.xml, "F:\\JavaFile\\ShardingSphereJDBC\\src\\main\\resources")); // 设置mapperXml生成路径

})

.strategyConfig(builder -> {

builder.addInclude("sys_user") // 设置需要生成的表名

.addTablePrefix("t_", "c_"); // 设置过滤表前缀

})

.templateEngine(new FreemarkerTemplateEngine()) // 使用Freemarker引擎模板,默认的是Velocity引擎模板

.execute();

}

}springboot启动类上加mybatis-plus注解

@SpringBootApplication

@MapperScan("com.study.shardingspherejdbc.mapper")

public class ShardingSphereJdbcApplication {

public static void main(String[] args) {

SpringApplication.run(ShardingSphereJdbcApplication.class, args);

}

}test文件内测试

@Autowired

private ISysUserService iSysUserService;

/**

* 插入数据测试,三个数据库都保存了该数据

*/

@Test

void testInsert() {

SysUser sysUser = new SysUser();

sysUser.setLoginName("canace1");

sysUser.setUserName("admin");

sysUser.setPhoneName("12345678999");

sysUser.setPassword("123456");

sysUser.setSalt("1234");

iSysUserService.save(sysUser);

}

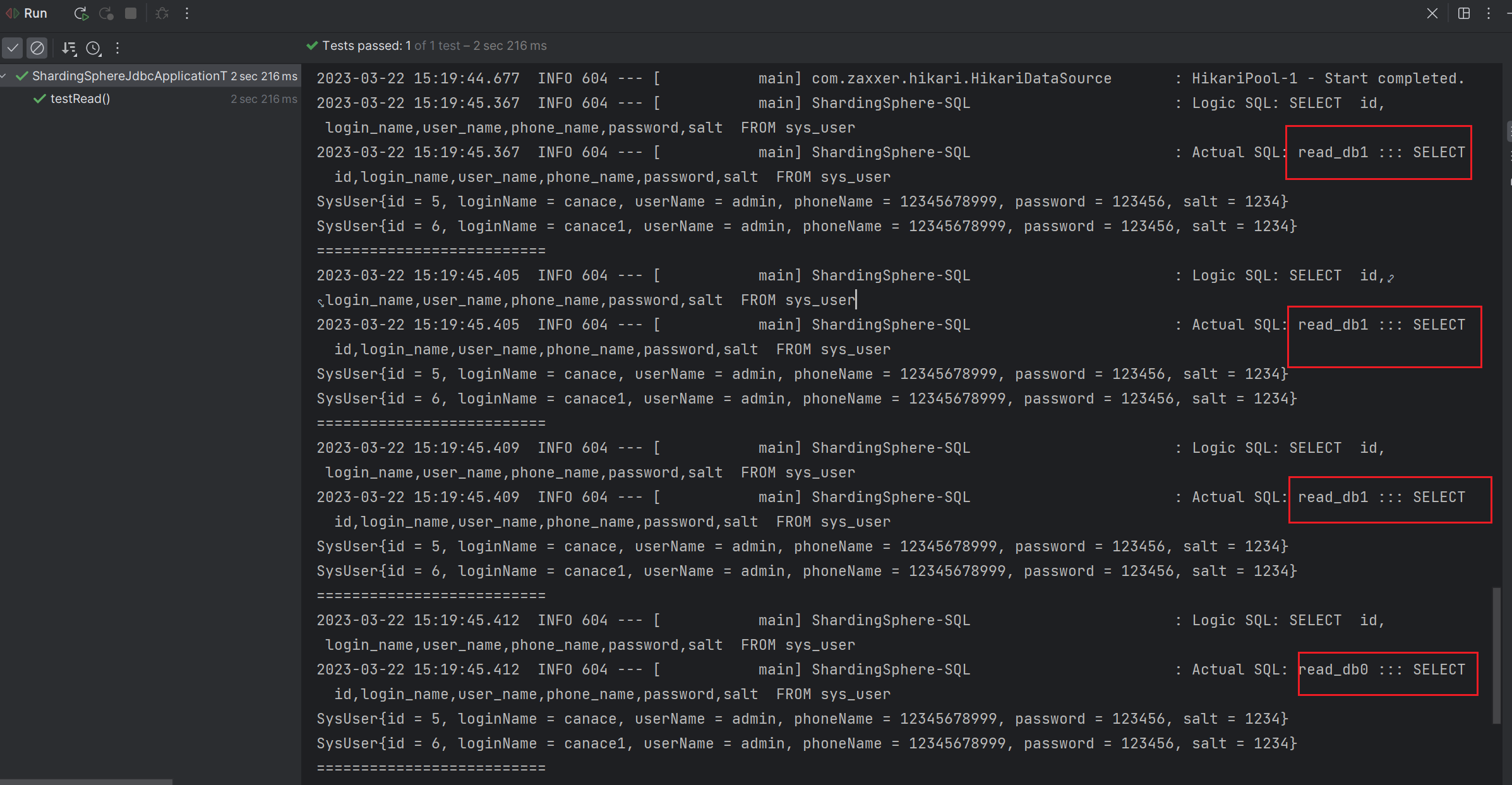

/**

* 读取测试,数据从两个读数据库中随机读取

*/

@Test

void testRead(){

for (int i = 0; i < 5; i++) {

List<SysUser> sysUsers = iSysUserService.list();

sysUsers.forEach(System.out::println);

System.out.println("==========================");

}

}

事务情况测试

/**

* 没有使用事务测试,存入写数据库write_db,从读数据中读取

*/

@Test

void testNoTransactional() {

SysUser sysUser = new SysUser();

sysUser.setLoginName("canace2");

sysUser.setUserName("admin2");

sysUser.setPhoneName("12345678999");

sysUser.setPassword("123456");

sysUser.setSalt("1234");

iSysUserService.save(sysUser);

System.out.println("==========================");

List<SysUser> sysUsers = iSysUserService.list();

sysUsers.forEach(System.out::println);

}

/**

* 使用事务测试,该数据由于事务没有提交,没有存入到任何数据库

* 但是在读取时会从读数据库中读,并且读到该数据

* Actual SQL: write_db ::: SELECT id,login_name,user_name,phone_name,password,salt FROM sys_user

*/

@Test

@Transactional

void testTransactional() {

SysUser sysUser = new SysUser();

sysUser.setLoginName("canace3");

sysUser.setUserName("admin3");

sysUser.setPhoneName("12345678999");

sysUser.setPassword("123456");

sysUser.setSalt("1234");

iSysUserService.save(sysUser);

System.out.println("==========================");

List<SysUser> sysUsers = iSysUserService.list();

sysUsers.forEach(System.out::println);

}

负载均衡策略配置

rules:

# 读写分离

- !READWRITE_SPLITTING

dataSources:

readwrite_ds:

staticStrategy:

writeDataSourceName: write_db

readDataSourceNames:

- read_db0

- read_db1

#负载均衡算法名称

loadBalancerName: weight

# loadBalancerName: random

# loadBalancerName: round_robin

# 负载均衡算法配置

loadBalancers:

weight:

type: WEIGHT #权重

props:

read_db0: 1 #两个读数据库进行1:2的权重进行读取

read_db1: 2

# round_robin:

# type: ROUND_ROBIN #轮转

# props:

# transaction-read-query-strategy: FIXED_PRIMARY

#transaction-read-query-strategy说明:所以策略都有这个,事务内读请求路由策略,可选值:FIXED_PRIMARY(路由到 primary)、FIXED_REPLICA(根据随机策略选择一个固定的 replica)、DYNAMIC_REPLICA(根据随机策略路由到不同的 replica),默认值:FIXED_PRIMARY。

# random:

# type: RANDOM #随机- 轮转负载均衡算法(ROUND_ROBIN)

- 随即负载均衡算法(RANDOM)

- 权重负载均衡算法(WEIGHT)

分库分表实现

创建新容器

docker run -d \

-p 3309:3306 \

-v /wolfcode/mysql/customer_data0/conf:/etc/mysql/conf.d \

-v /wolfcode/mysql/customer_data0/data:/var/lib/mysql \

-e MYSQL_ROOT_PASSWORD=root \

--name customer_data0 \

mysql

#进入从节点容器,并登录mysql

docker exec -it customer_data0 /bin/bash

mysql -uroot -proot创建数据库

create database customer_data;

use customer_data;

CREATE TABLE `bus_statement` (

`statement_no` bigint(0) NOT NULL AUTO_INCREMENT,

`customer_name` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`customer_phone` bigint(0) NULL DEFAULT NULL,

`actual_arrival_time` datetime(0) NULL DEFAULT NULL,

`service_type` bigint(0) NULL DEFAULT NULL,

`status` int(255) UNSIGNED ZEROFILL NULL DEFAULT NULL,

`total_amount` decimal(10, 0) NULL DEFAULT NULL,

`create_time` datetime(0) NULL DEFAULT NULL,

`info` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

PRIMARY KEY (`statement_no`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;生成bus_satatement相关代码

@Test

void generatorDomain() {

FastAutoGenerator.create("jdbc:mysql://192.168.31.128:3309/customer_data?serverTimezone=UTC&useSSL=false", "root", "root")

.globalConfig(builder -> {

builder.author("canace") // 设置作者

// .enableSwagger(f) // 开启 swagger 模式

.fileOverride() // 覆盖已生成文件

.outputDir("F:\\JavaFile\\ShardingSphereJDBC\\src\\main\\java"); // 指定输出目录

})

.packageConfig(builder -> {

builder.parent("com.study.shardingspherejdbc") // 设置父包名

.moduleName(null) // 设置父包模块名

.pathInfo(Collections.singletonMap(OutputFile.xml, "F:\\JavaFile\\ShardingSphereJDBC\\src\\main\\resources\\mapper")); // 设置mapperXml生成路径

})

.strategyConfig(builder -> {

builder.addInclude("bus_statement") // 设置需要生成的表名

.addTablePrefix("t_", "c_"); // 设置过滤表前缀

})

.templateEngine(new FreemarkerTemplateEngine()) // 使用Freemarker引擎模板,默认的是Velocity引擎模板

.execute();

}新增yaml信息

customer_data0:

dataSourceClassName: com.zaxxer.hikari.HikariDataSource

driverClassName: com.mysql.jdbc.Driver

jdbcUrl: jdbc:mysql://192.168.31.128:3309/customer_data?useUnicode=true&characterEncoding=UTF-8&allowMultiQueries=true&serverTimezone=Asia/Shanghai&useSSL=false&allowPublicKeyRetrieval=true

username: root

password: root

#进行分片

- !SHARDING

tables:

bus_statement: #表名

actualDataNodes: customer_data0.bus_statement # 由数据源名 + 表名组成(参考 Inline 语法规则)垂直分表

/**

* 测试垂直分表,两个不同的表正确插入自己的表中

*/

@Test

void testVerticalShardingWrite(){

SysUser sysUser = new SysUser();

sysUser.setLoginName("canace4");

sysUser.setUserName("admin4");

sysUser.setPhoneName("12345678999");

sysUser.setPassword("123456");

sysUser.setSalt("1234");

iSysUserService.save(sysUser);

BusStatement busStatement = new BusStatement();

busStatement.setCustomerName("canace");

busStatement.setCustomerPhone(12345678888L);

busStatement.setActualArrivalTime(new Date());

busStatement.setCreateTime(new Date());

busStatement.setServiceType(0L);

busStatement.setTotalAmount(new BigDecimal(588));

busStatement.setInfo("无");

iBusStatementService.save(busStatement);

}

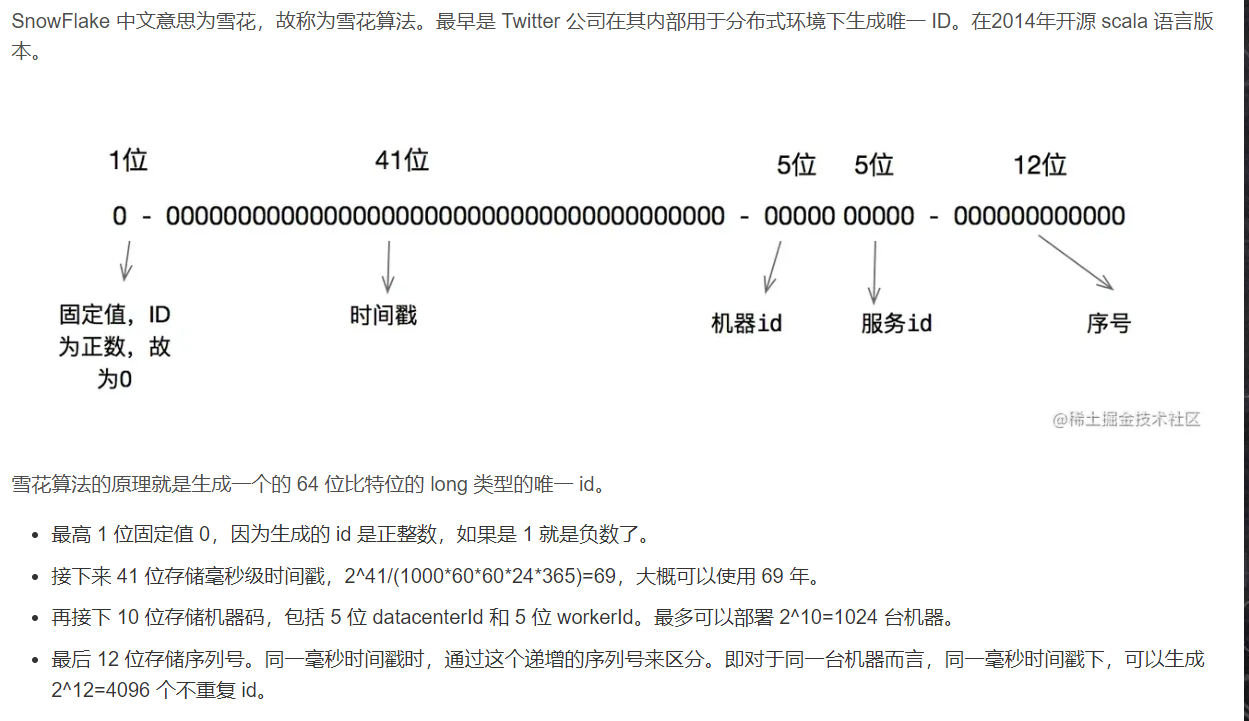

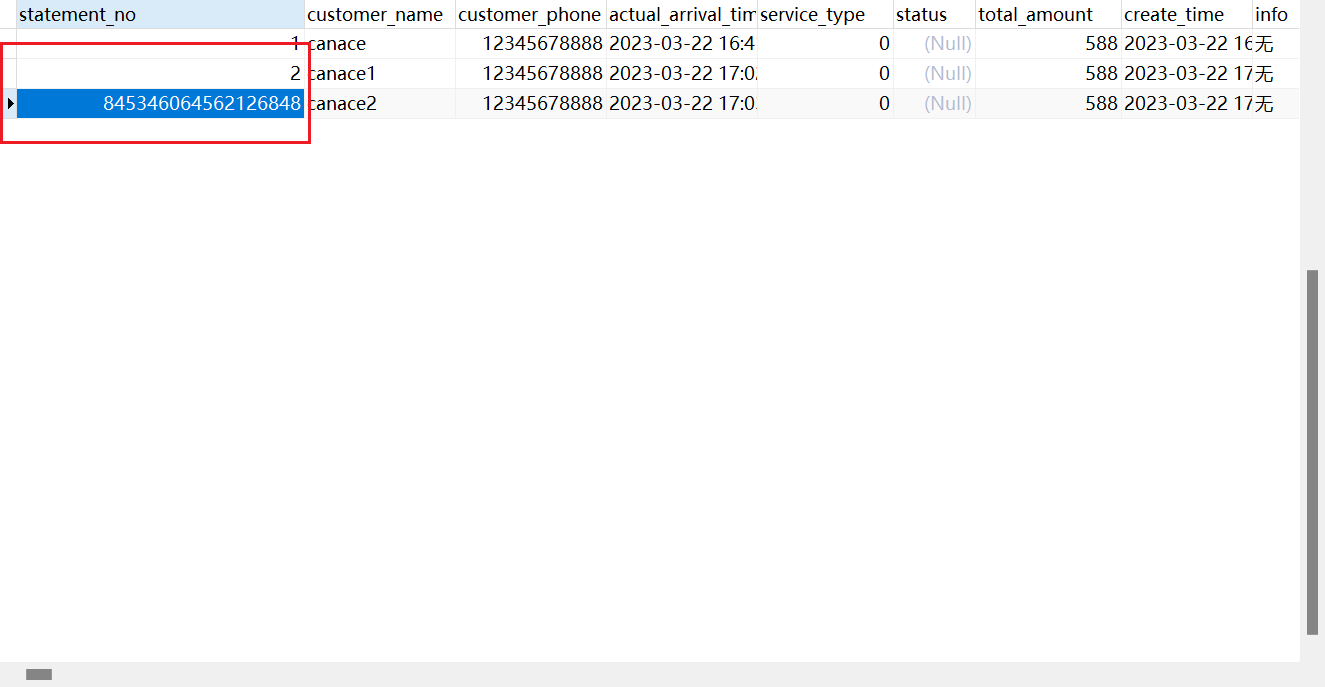

分布式序列ID

现在的服务基本是分布式、微服务形式的,而且大数据量也导致分库分表的产生,对于水平分表就需要保证表中 id 的全局唯一性。

对于 MySQL 而言,一个表中的主键 id 一般使用自增的方式,但是如果进行水平分表之后,多个表中会生成重复的 id 值。那么如何保证水平分表后的多张表中的 id 是全局唯一性的呢?

雪花算法是其中一个用于解决分布式 id 的高效方案,也是许多互联网公司在推荐使用的。

#进行分片

- !SHARDING

tables:

bus_statement: #表名

actualDataNodes: customer_data0.bus_statement # 由数据源名 + 表名组成(参考 Inline 语法规则)

keyGenerateStrategy: #分布式序列策略

column: statement_no # 自增列名称,缺省表示不使用自增主键生成器

keyGeneratorName: snowflake # 分布式序列算法名称 雪花算法

keyGenerators:

snowflake:

type: SNOWFLAKE /**

* 测试雪花算法

*/

@Test

void testSnowFlake(){

BusStatement busStatement = new BusStatement();

busStatement.setCustomerName("canace2");

busStatement.setCustomerPhone(12345678888L);

busStatement.setActualArrivalTime(new Date());

busStatement.setCreateTime(new Date());

busStatement.setServiceType(0L);

busStatement.setTotalAmount(new BigDecimal(588));

busStatement.setInfo("无");

iBusStatementService.save(busStatement);

}

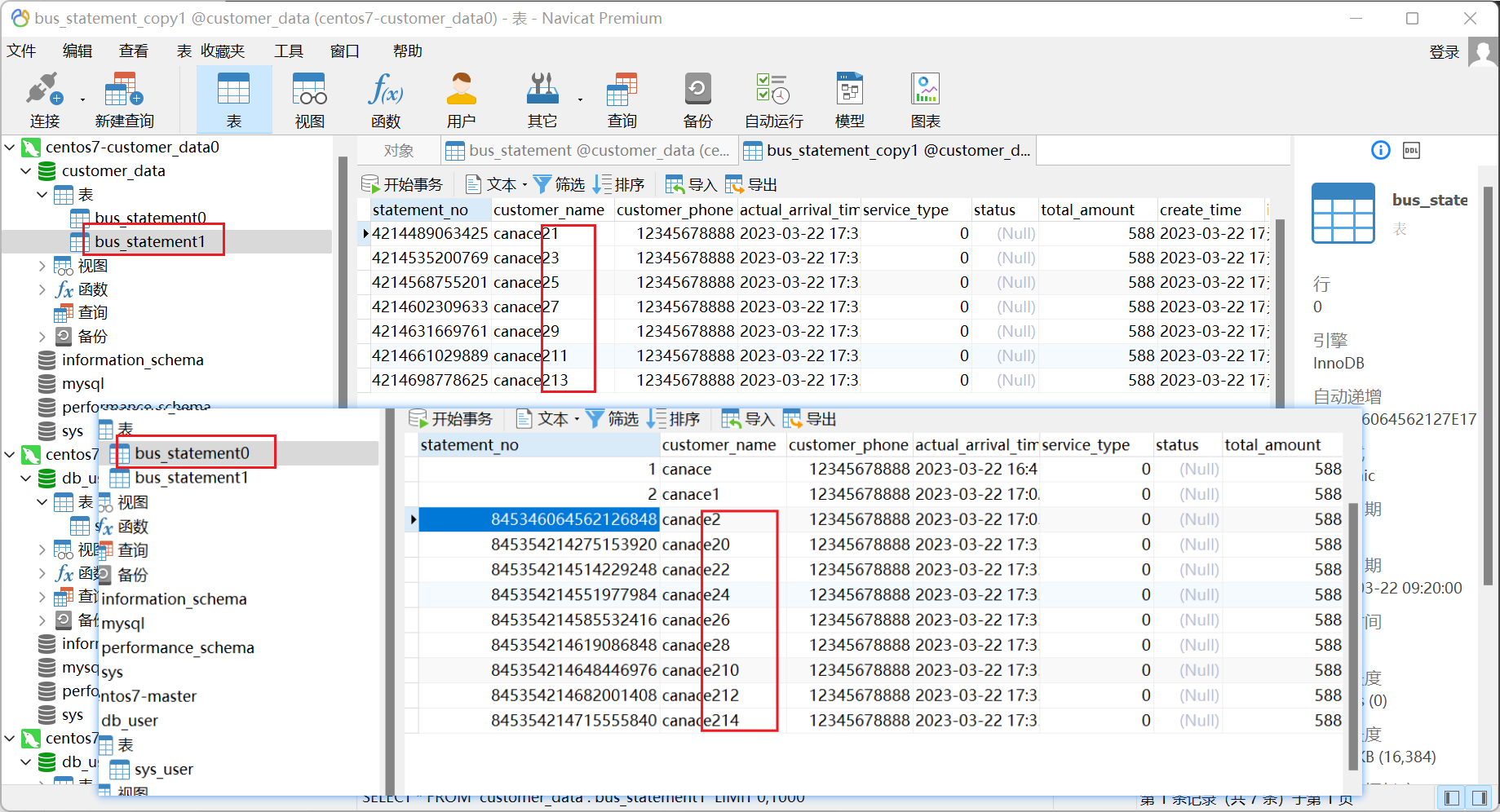

水平分表

复制表bus_statement为bus_statement1,之前表改为bus_statement0

#进行分片

- !SHARDING

tables:

bus_statement: #表名

# actualDataNodes: customer_data0.bus_statement # 由数据源名 + 表名组成(参考 Inline 语法规则)

actualDataNodes: customer_data0.bus_statement$->{0..1} #支持多表,即bus_statement0和bus_statement1

tableStrategy: # 分表策略,同分库策略

standard: # 用于单分片键的标准分片场景

shardingColumn: statement_no # 分表列名称

shardingAlgorithmName: t_mod # 分表算法名称

keyGenerateStrategy: #分布式序列策略

column: statement_no # 自增列名称,缺省表示不使用自增主键生成器

keyGeneratorName: snowflake # 分布式序列算法名称 雪花算法

# 分片算法配置

shardingAlgorithms:

t_mod:

type: MOD

props:

sharding-count: 2

keyGenerators:

snowflake:

type: SNOWFLAKE /**

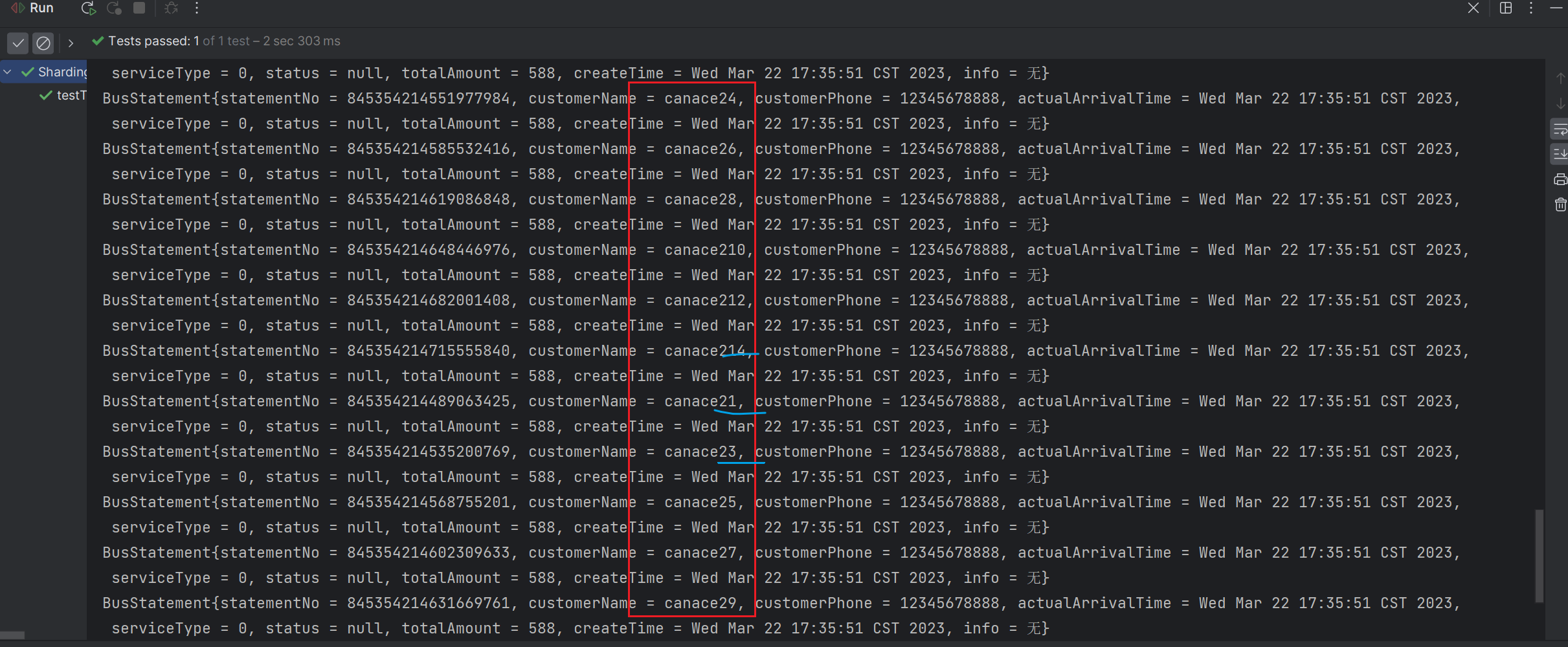

* 水平分表测试

*/

@Test

void testTableShardingInsert(){

for (int i = 0; i < 15; i++) {

BusStatement busStatement = new BusStatement();

busStatement.setCustomerName("canace2"+ i);

busStatement.setCustomerPhone(12345678888L);

busStatement.setActualArrivalTime(new Date());

busStatement.setCreateTime(new Date());

busStatement.setServiceType(0L);

busStatement.setTotalAmount(new BigDecimal(588));

busStatement.setInfo("无");

iBusStatementService.save(busStatement);

}

}

/**

* 水平分表测试数据是否都查询出来了

*/

@Test

void testTableShardingRead(){

List<BusStatement> list = iBusStatementService.list();

list.forEach(System.out::println);

}

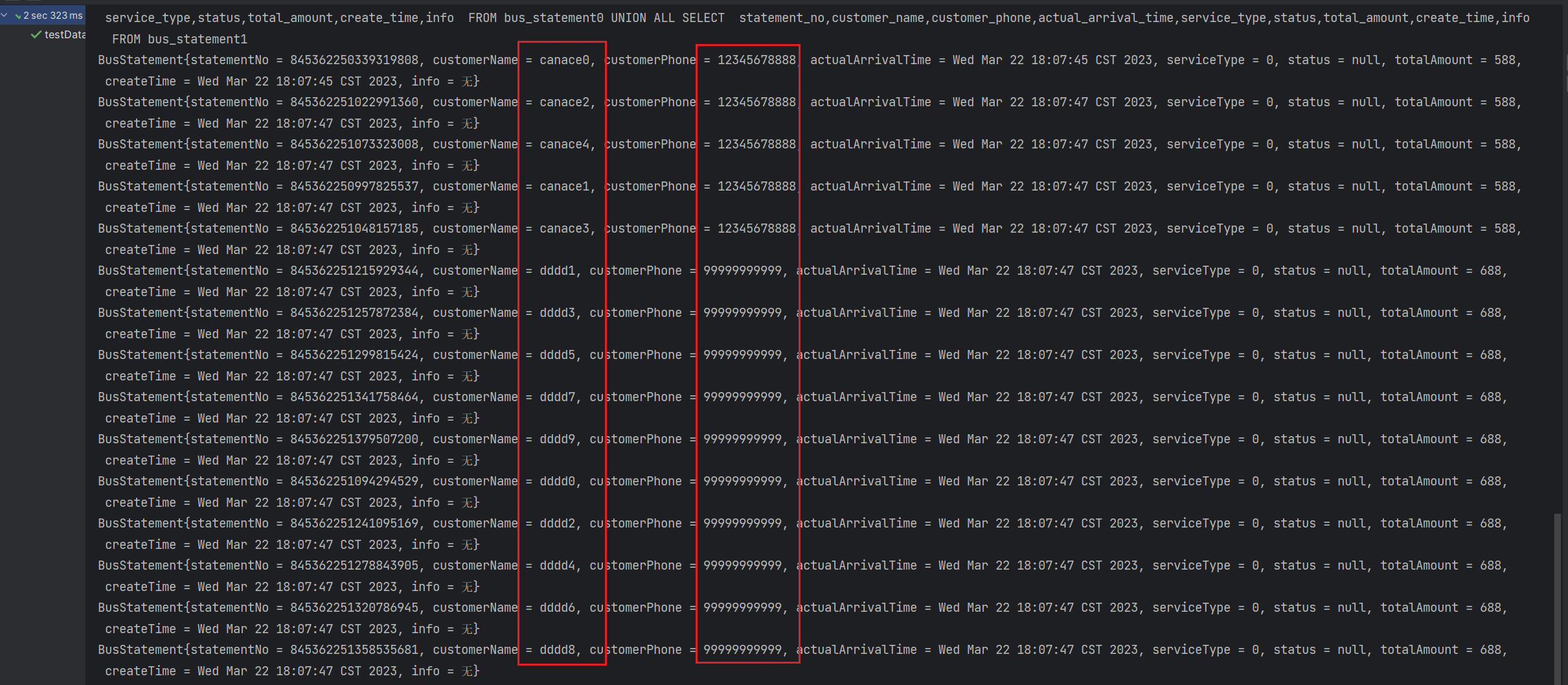

水平分库

创建新容器

docker run -d \

-p 3310:3306 \

-v /wolfcode/mysql/customer_data1/conf:/etc/mysql/conf.d \

-v /wolfcode/mysql/customer_data1/data:/var/lib/mysql \

-e MYSQL_ROOT_PASSWORD=root \

--name customer_data1 \

mysql创建表

create database customer_data;

use customer_data;

CREATE TABLE `bus_statement0` (

`statement_no` bigint(0) NOT NULL AUTO_INCREMENT,

`customer_name` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`customer_phone` bigint(0) NULL DEFAULT NULL,

`actual_arrival_time` datetime(0) NULL DEFAULT NULL,

`service_type` bigint(0) NULL DEFAULT NULL,

`status` int(255) UNSIGNED ZEROFILL NULL DEFAULT NULL,

`total_amount` decimal(10, 0) NULL DEFAULT NULL,

`create_time` datetime(0) NULL DEFAULT NULL,

`info` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

PRIMARY KEY (`statement_no`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

CREATE TABLE `bus_statement1` (

`statement_no` bigint(0) NOT NULL AUTO_INCREMENT,

`customer_name` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`customer_phone` bigint(0) NULL DEFAULT NULL,

`actual_arrival_time` datetime(0) NULL DEFAULT NULL,

`service_type` bigint(0) NULL DEFAULT NULL,

`status` int(255) UNSIGNED ZEROFILL NULL DEFAULT NULL,

`total_amount` decimal(10, 0) NULL DEFAULT NULL,

`create_time` datetime(0) NULL DEFAULT NULL,

`info` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

PRIMARY KEY (`statement_no`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;配置

customer_data1:

dataSourceClassName: com.zaxxer.hikari.HikariDataSource

driverClassName: com.mysql.jdbc.Driver

jdbcUrl: jdbc:mysql://192.168.31.128:3310/customer_data?useUnicode=true&characterEncoding=UTF-8&allowMultiQueries=true&serverTimezone=Asia/Shanghai&useSSL=false&allowPublicKeyRetrieval=true

username: root

password: root

#进行分片

- !SHARDING

tables:

bus_statement: #表名

# actualDataNodes: customer_data0.bus_statement # 由数据源名 + 表名组成(参考 Inline 语法规则)

actualDataNodes: customer_data$->{0..1}.bus_statement$->{0..1} #支持多表,即bus_statement0和bus_statement1

tableStrategy: # 分表策略

standard: # 用于单分表键的标准分表场景

shardingColumn: statement_no # 分表列名称

shardingAlgorithmName: t_mod # 分表算法名称

databaseStrategy: #分库策略

standard: # 用于单分片键的标准分片场景

shardingColumn: customer_phone # 分片列名称

shardingAlgorithmName: t_inline # 分片算法名称

keyGenerateStrategy: #分布式序列策略

column: statement_no # 自增列名称,缺省表示不使用自增主键生成器

keyGeneratorName: snowflake # 分布式序列算法名称 雪花算法

# 分片算法配置

shardingAlgorithms:

t_mod:

type: MOD

props:

sharding-count: 2

t_inline:

type: INLINE

props:

algorithm-expression: customer_data$->{customer_phone % 2}

keyGenerators:

snowflake:

type: SNOWFLAKE /**

* 水平分片测试,查看是否按照手机号码将数据分片到两个数据库中

*/

@Test

void testDatabaseShardingInsert(){

for (int i = 0; i < 5; i++) {

BusStatement busStatement = new BusStatement();

busStatement.setCustomerName("canace"+ i);

busStatement.setCustomerPhone(12345678888L);

busStatement.setActualArrivalTime(new Date());

busStatement.setCreateTime(new Date());

busStatement.setServiceType(0L);

busStatement.setTotalAmount(new BigDecimal(588));

busStatement.setInfo("无");

iBusStatementService.save(busStatement);

}

for (int i = 0; i < 10; i++) {

BusStatement busStatement = new BusStatement();

busStatement.setCustomerName("dddd"+ i);

busStatement.setCustomerPhone(99999999999L);

busStatement.setActualArrivalTime(new Date());

busStatement.setCreateTime(new Date());

busStatement.setServiceType(0L);

busStatement.setTotalAmount(new BigDecimal(688));

busStatement.setInfo("无");

iBusStatementService.save(busStatement);

}

}

/**

* 查看两个库两张表数据都查出来了

*/

@Test

void testDatabaseShardingRead(){

List<BusStatement> list = iBusStatementService.list();

list.forEach(System.out::println);

}

分片算法

自动分片算法

- 取模分片算法 MOD

- 哈希取模分片算法 HASH_MOD

- 基于分片容量的范围分片算法 VOLUME_RANGE

- 基于分片边界的范围分片算法 BOUNDARY_RANGE

- 自动时间段分片算法 AUTO_INTERVAL

标准分片算法

- 行表达式分片算法 INLINE

- 时间范围分片算法 INTERVAL

- 基于Cosld的固定时间范围的分片算法 COSID_INTERVAL

- 基于Cosld的雪花ID固定时间范围的分片算法 COSID_INTERVAL_SNOWFLAKE

- 基于Cosld的取模分片算法 COSID_MOD

- 复合分片算法 COMPLEX_INLINE

- Hint分片算法 HINT_INLINE

- 自定义类分片算法

评论